MarioNette: Self-Supervised Sprite Learning

Abstract

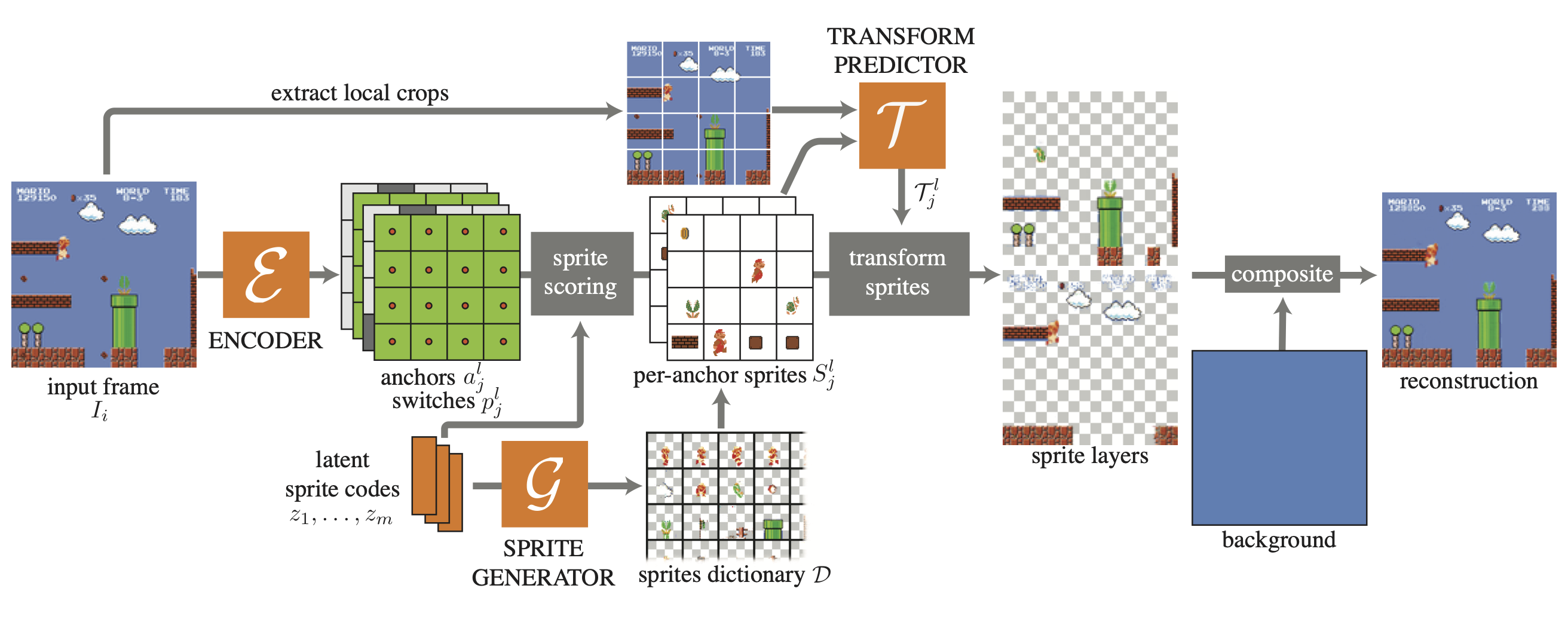

Artists and video game designers often construct 2D animations using libraries of sprites—textured patches of objects and characters. We propose a deep learning approach that decomposes sprite-based video animations into a disentangled representation of recurring graphic elements in a self-supervised manner. By jointly learning a dictionary of possibly transparent patches and training a network that places them onto a canvas, we deconstruct sprite-based content into a sparse, consistent, and explicit representation that can be easily used in downstream tasks, like editing or analysis. Our framework offers a promising approach for discovering recurring visual patterns in image collections without supervision.

Method

An overview of our method. We jointly learn a sprite dictionary, represented as a set of trainable latent codes decoded by a generator, and an encoder network that embeds an input frame into a grid of latent codes, or anchors. Comparing anchor embeddings to dictionary codes lets us assign a sprite to each grid cell. The encoder also outputs a switch probability per anchor that turns the assigned sprite on or off. Compositing the active sprites yields a reconstruction of the input, and our self-supervised training optimizes a reconstruction loss on it.
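The assignment and compositing steps described above can be illustrated with a small NumPy sketch. This is not the released implementation: the function names `assign_sprites` and `composite`, the cosine similarity with a softmax temperature, and the restriction to one grid-aligned sprite per cell on a single layer are all assumptions made for illustration. The encoder and generator are omitted, so anchors and decoded RGBA sprites are taken as given arrays.

```python
import numpy as np


def assign_sprites(anchors, dictionary, temperature=1.0):
    """Soft-assign one dictionary entry to every grid cell.

    anchors:    (H, W, D) latent codes from the encoder, one per grid cell.
    dictionary: (K, D) trainable latent codes, one per sprite.
    Returns (H, W, K) weights that sum to 1 over the K sprites.
    """
    # Assumption: cosine similarity followed by a softmax. The overview only
    # says that anchors are compared to dictionary codes.
    a = anchors / np.linalg.norm(anchors, axis=-1, keepdims=True)
    d = dictionary / np.linalg.norm(dictionary, axis=-1, keepdims=True)
    logits = a @ d.T / temperature                      # (H, W, K)
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=-1, keepdims=True)


def composite(background, sprites, weights, switch, cell):
    """Alpha-composite each cell's sprite over a background canvas.

    background: (H*cell, W*cell, 3) RGB image with values in [0, 1].
    sprites:    (K, cell, cell, 4) RGBA patches (the decoded dictionary).
    weights:    (H, W, K) output of assign_sprites.
    switch:     (H, W) on/off probability per anchor.
    """
    canvas = background.astype(float)  # astype returns a copy
    H, W, _ = weights.shape
    for i in range(H):
        for j in range(W):
            # Expected RGBA patch under the soft assignment for this cell.
            patch = np.tensordot(weights[i, j], sprites, axes=1)
            rgb = patch[..., :3]
            # The switch probability scales the sprite's opacity.
            alpha = patch[..., 3:] * switch[i, j]
            ys = slice(i * cell, (i + 1) * cell)
            xs = slice(j * cell, (j + 1) * cell)
            canvas[ys, xs] = alpha * rgb + (1.0 - alpha) * canvas[ys, xs]
    return canvas
```

Because every operation here is differentiable with respect to the weights, switch values, and sprite pixels, a reconstruction loss between `composite(...)` and the input frame could in principle drive all three. Training itself would need an automatic differentiation framework rather than plain NumPy.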

Video

Paper

D. Smirnov, M. Gharbi, M. Fisher, V. Guizilini, A. A. Efros, J. Solomon

MarioNette: Self-Supervised Sprite Learning

Conference on Neural Information Processing Systems (NeurIPS) 2021, online

OpenReview | BibTeX

@inproceedings{smirnov2021marionette,
  title={{MarioNette}: Self-Supervised Sprite Learning},
  author={Smirnov, Dmitriy and Gharbi, Micha\"el and Fisher, Matthew and Guizilini, Vitor and Efros, Alexei A. and Solomon, Justin},
  year={2021},
  booktitle={Advances in Neural Information Processing Systems}
}

Poster

Acknowledgements

The MIT Geometric Data Processing group acknowledges the generous support of Army Research Office grants W911NF2010168 and W911NF2110293, Air Force Office of Scientific Research award FA9550-19-1-031, National Science Foundation grants IIS-1838071 and CHS-1955697, the CSAIL Systems that Learn program, the MIT-IBM Watson AI Laboratory, the Toyota-CSAIL Joint Research Center, a gift from Adobe Systems, an MIT.nano Immersion Lab/NCSOFT Gaming Program seed grant, and the Skoltech-MIT Next Generation Program. This work was also supported by the National Science Foundation Graduate Research Fellowship under Grant No. 1122374.